Relearning the Metropolis-Hastings Algorithm

Markov Chain Monte Carlo (MCMC) is a family of methods for efficiently sampling from an arbitrary probability distribution. The Metropolis-Hastings algorithm is a specific example of MCMC, which we’ll be summarizing in this post. First, however, let’s justify the need of these sampling algorithms.

Sampling is hard, sometimes

Informally, the problem of sampling a distribution is usually posed as:

- We are given some black-box algorithm for generating uniformly random numbers from the interval , which we can use as many times as we like.

- We are given some function which we call a probability distribution, where the integral of over the entire domain is equal to .

- Using these, we want to create an algorithm such that the distribution of samples generated by this algorithm approximates arbitrarily close as the number of samples increase..

For example, suppose we want to generate random samples from the normal distribution . We’re able to use the Box-Muller Transform to come up with a sampling method:

- Generate random numbers and from the uniform distribution on .

- Output . Using only our black-box algorithm for generating random numbers between 0 and 1, we’re able to produce an algorithm where the probability distribution of equals the normal distribution.

Rejection sampling

In the previous example, we had a nice, clean sampling method which resulted from the mathematical properties of the normal distribution. However, this isn’t always the case – consider, for example, the normal distribution with the additional condition that the value must be at least 1. We’re no longer able to directly use the Box-Muller Transform, but there’s a way to get around this:

- Use the Box-Muller Transform to sample a value .

- If , output . Otherwise, return to Step 1. This method of sampling a wider distribution, then accepting or rejecting samples based on a condition, is known as Rejection sampling. While rejection sampling methods always require a non-deterministic amount of time, rejection sampling is often fast and simple, and as a result is frequently useful for sampling conditional distributions.

Now consider the normal distribution , except with the additonal condition that the value must be at least 5. Given a single sample of the normal distribution, the probability that the condition holds true is about 1 in 5 million. As a result, using rejection sampling in the same fashion as the previous example isn’t viable, since it’s too costly in terms of runtime. This is where MCMC methods come in.

The Metropolis-Hastings algorithm

The Metropolis-Hastings algorithm is able to sample an arbitrary probability distribution given access to two things:

- The previously mentioned uniform distribution sampler

- A function proportional to the distribution .

Using the sampler, we also need to supply the algorithm with a sampling method for some distribution , where is symmetric: . One commonly used distribution (which we’ll be using) is . Now that we specified everything that we need, the Metropolis-Hasting algorithm, as taken from Wikipedia, works as follows:

- Pick some element to be a starting point.

- Sample a candidate from the distribution .

- Calculate the acceptance ratio .

- Generate a random number from the uniform distribution on . a. If , becomes the newest sample: append to the list of samples and set . b. If , nothing happens.

- Return to Step 2.

A few things are worth noting: the latest sample is always accepted when and . As a result, the value tends to move towards higher-probability regions. In addition, the Metropolis-Hastings algorithm is often called a random walk MCMC due to how randomly changes in direction every iteration. Finally, the initial choice of starting point and choice of greatly affects how many iterations we need to closely approximate . For example, in our previous distribution of and , the combined choice of starting point and distribution would require multiple iterations before the algorithm finally reaches a point where is nonzero.

There’s another problem with Metropolis-Hastings: consider the distribution with the additional condition . Without a sufficiently high-variance choice of distribution , the algorithm will be forever stuck in one of the two disjoint areas of nonzero probabilities. As a result, it’s pretty difficult for Metropolis-Hastings to accurately sample this entire distribution without manual modifications.

Implementation

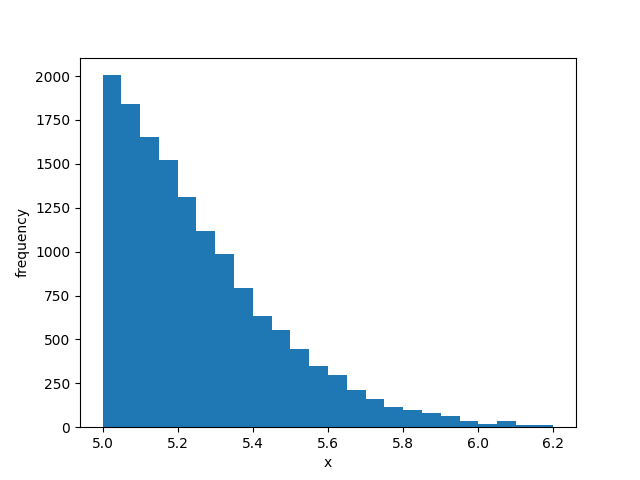

You can find my implementation of Metropolis-Hastings here, using the previously mentioned normal distribution with as an example. Iterating 100,000 times, we’re able to obtain the resulting distrbution of samples: