T-distributed Stochastic Neighbor Embedding (t-SNE, van der Maaten and Hinton ‘08), is a dimensionality reduction technique commonly used for data visualization. In this post I’ll summarize how Stochastic Neighbor Embedding (SNE) works, which t-SNE is built off of. I’ll also implement SNE on the MNIST dataset. In a later post, I’ll summarize this wonderful post on distill.pub, and then implement t-SNE and test in on a couple of datasets.

Stochastic Neighbor Embedding

t-SNE shares its main idea with Stochastic Neighbor Embedding (SNE, Hinton and Roweis ‘02). SNE works with a mapping of the original high-dimensional points to points in a low-dimensional space. To evaluate how well the mapped points resemble the same structure as points , SNE does the following:

- SNE first computes measures of similarity between the original points. For this, SNE uses conditional probabilities to denote the probability of selecting point as a neighbor of . Note that these probabilities are drawn from the Gaussian distribution with . Assume that is given for now – we’ll determine from a parameter called perplexity, to be explained later.

- Next, SNE computes the same conditional probabilities but on the low-dimensional points . We have that .

- If the pairwise similarity of points resemble that of the original points , then the conditional probability distributions and will be similar as well. Therefore, as a measure of how well points model the similarities in points , we use the sum of the KL-divergences .

To summarize, we create conditional probability distributions to model the pairwise similarity in both the low-dimensional points and high-dimensional points. When the two distributions are similar, the low-dimensional points appropriately models the similarity in points . As a result, we will optimize the KL-divergence between the two conditional probability distributions.

It’s worth noting that because KL-divergence is not symmetric, SNE also possesses asymmetric properties – KL-divergence assigns high cost when and are far but and are close, but it assigns a small cost for the opposite.

Deriving the SNE Update Rule

We have a cost function . The goal of SNE is to find points which minimize this cost. To do this, we will compute the gradient . For the purpose of my own understanding, I worked out all the algebra for the gradient:

1) We have .

2) , because is independent from . As a result, we have

$$\displaystyle \frac{\partial C}{\partial y_i} = \frac{\partial}{\partial y_i}\sum_{j, k} (p_{k \vert j} \log \frac{1}{q_{k\vert j}}) = \sum_{j,k} (-\frac{p_{k \vert j}}{q_{k \vert j}}\frac{\partial q_{k \vert j}}{\partial y_i})$$.

3) This sum can be separated into two components:

$$\displaystyle\frac{\partial C}{\partial y_i} = \sum_j (-\frac{p_{j\vert i}}{q_{j\vert i}}\frac{\partial q_{j\vert i}}{\partial y_i} - \frac{p_{i\vert j}}{q_{i\vert j}}\frac{\partial q_{i\vert j}}{\partial y_i}) + \sum_{j, k \neq i} (-\frac{p_{k \vert j}}{q_{k \vert j}}\frac{\partial q_{k \vert j}}{\partial y_i})$$.

4) Now we evaluate the partial derivatives. For :

$$\displaystyle q_{j\vert i} = \frac{\exp (-\vert\vert y_i - y_j \vert\vert^2)}{\sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2)} \\

\frac{\partial q_{j\vert i}}{\partial y_i} = \frac{\frac{\partial}{\partial y_i} \exp (-\vert\vert y_i - y_j \vert\vert^2)}{\sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2)}-\frac{\exp (-\vert\vert y_i - y_j \vert\vert^2)\frac{\partial}{\partial y_i}(\sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2))}{(\sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2))^2} \\

= q_{j\vert i} 2(y_j - y_i) - q_{j\vert i}\frac{\frac{\partial}{\partial y_i} \sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2))}{\sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2))} \\

= q_{j\vert i} 2(y_j - y_i) - q_{j\vert i}\frac{\sum_{k\neq i} \exp(-\vert\vert y_i - y_k\vert\vert^2) 2(y_k - y_i)}{\sum_{k\neq i} \exp (-\vert\vert y_i - y_k \vert\vert^2))} \\

= q_{j\vert i} 2(y_j - y_i) - q_{j\vert i} \sum_{k\neq i} q_{k\vert i}2(y_k - y_i)$$.

5) For :

$$\displaystyle q_{i\vert j} = 1 - (\sum_{k\neq i, j} \exp (-\vert\vert y_j - y_k \vert\vert^2))\frac{1}{\sum_{k\neq i} \exp (-\vert\vert y_j - y_k \vert\vert^2)}\\ \displaystyle\frac{\partial q_{i\vert j}}{\partial y_i} = \frac{\partial q_{i\vert j}}{\partial y_i} = - (\sum_{k\neq i, j} \exp (-\vert\vert y_j - y_k \vert\vert^2)) \frac{-1}{(\sum_{k\neq j} \exp (-\vert\vert y_j - y_k \vert\vert^2))^2}\frac{\exp (-\vert\vert y_i - y_j\vert\vert^2)}{\partial y_i}\\

\displaystyle = \frac{1}{\sum_{k\neq j} \exp (-\vert\vert y_j - y_k \vert\vert^2)}\frac{\sum_{k\neq i, j} \exp (-\vert\vert y_j - y_k \vert\vert^2)}{\sum_{k\neq j} \exp (-\vert\vert y_j - y_k \vert\vert^2)} \exp (-\vert\vert y_j - y_i\vert\vert^2) 2(y_j - y_i) \\

= 2 q_{i\vert j} (1 - q_{i\vert j}) (y_j - y_i)$$.

5) For , :

$$\displaystyle q_{k\vert j} = \exp (-\vert\vert y_j - y_k\vert\vert^2)\frac{1}{\sum_{l\neq j} \exp (-\vert\vert y_j - y_l\vert\vert^2)} \\

\frac{\partial q_{k\vert j}}{\partial y_i} = \exp (-\vert\vert y_j - y_k\vert\vert^2)\frac{-1}{(\sum_{l\neq j} \exp (-\vert\vert y_j - y_l\vert\vert^2))^2}\frac{\partial \exp (-\vert\vert y_j - y_i\vert\vert^2)}{\partial y_i}\\

= \exp (-\vert\vert y_j - y_k\vert\vert^2)\frac{-1}{(\sum_{l\neq j} \exp (-\vert\vert y_j - y_l\vert\vert^2))^2}\exp (-\vert\vert y_j - y_i\vert\vert^2) 2(y_j - y_i) \\

= q_{k\vert j} \frac{-\exp (-\vert\vert y_i - y_j\vert\vert^2)}{\sum_{l\neq j}\exp (-\vert\vert y_j - y_l\vert\vert^2)}2(y_j - y_i)\\

= -2q_{k\vert j}q_{i\vert j}(y_j - y_i)$$

6) Reusing the sum from Part (3), we have

$$\begin{align*}

\displaystyle\frac{\partial C}{\partial y_i} &= \sum_{j\neq i} (-\frac{p_{j\vert i}}{q_{j\vert i}}\frac{\partial q_{j\vert i}}{\partial y_i} - \frac{p_{i\vert j}}{q_{i\vert j}}\frac{\partial q_{i\vert j}}{\partial y_i}) + \sum_{j, k \neq i} (-\frac{p_{k \vert j}}{q_{k \vert j}}\frac{\partial q_{k \vert j}}{\partial y_i}) \\

&= \sum_{j\neq i} (-2p_{j\vert i} ((y_j - y_i) - \sum_{k\neq i} q_{k\vert i} (y_k - y_i)) - 2p_{i\vert j}(1 - q_{i\vert j})(y_j - y_i)) + \sum_{j, k\neq i} (2p_{k \vert j}q_{i\vert j}(y_j - y_i)) \\

&= -2\sum_{j\neq i} (y_j - y_i)(p_{j\vert i} + p_{i \vert j}(1 - q_{i\vert j})) + 2\sum_{j, k\neq i}p_{j\vert i}q_{k\vert i}(y_k - y_i) + \sum_{j, k\neq i} 2p_{k\vert j}q_{i\vert j} (y_j - y_i).\end{align*}$$

Taking advantage of the facts that and , we have

$$\begin{align*}

\frac{\partial C}{\partial y_i} &= -2\sum_{j\neq i} (y_j - y_i)(p_{j\vert i} + p_{i \vert j}(1 - q_{i\vert j})) + 2\sum_{k\neq i}q_{k\vert i}(y_k - y_i) + \sum_{j\neq i} 2(1 - p_{i \vert j})q_{i\vert j} (y_j - y_i)\\

&= 2\sum_{j\neq i} (y_i - y_j)(p_{j\vert i} + p_{i\vert j}(1 - q_{i\vert j}) - q_{j\vert i} - (1 - p_{i\vert j})q_{i\vert j}) \\

&= 2\sum_{j\neq i} (y_i - y_j)(p_{j\vert i} + p_{i\vert j} - q_{i\vert j} - q_{j\vert i}).\end{align*}$$

Perplexity

Hinton and Roweis (in the original SNE paper) introduce perplexity as a means of determining the values of . As a result, perplexity specifies to what degree points are considered the ‘neighbors’ for some point . It does so as follows:

- For a fixed value of , we have a corresponding distribution .

- Consider the Shannon Entropy of . That is, .

- When is very small, the closest point to dominates the distribution, and . As increases, the probabilities of all points become closer to each other and the Shannon Entropy increases.

Perplexity determines our choice of by making us select . Because is an increasing function on , there exists a unique solution for for a given value of perplexity, which can be found using binary search.

Miscellaneous

Hinton and Roweis initialize the algorithm by randomly sampling from an isotropic Gaussian distribution. Also, momentum is used in the gradient descent algorithm for better performance. From the original paper:

$$\mathcal{Y}^{(t)} = \mathcal{Y}^{(t-1)} - \eta\displaystyle\frac{\partial C}{\partial \mathcal{Y}} + \alpha (t) (\mathcal{Y}^{(t - 1)} - \mathcal{Y}^{(t-2)}).$$

Implementation on MNIST

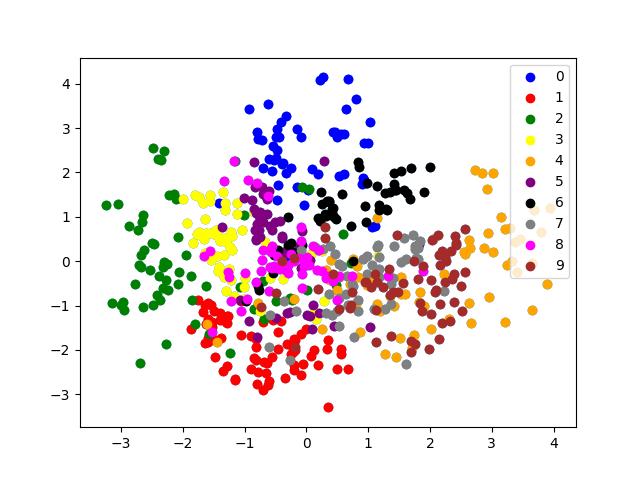

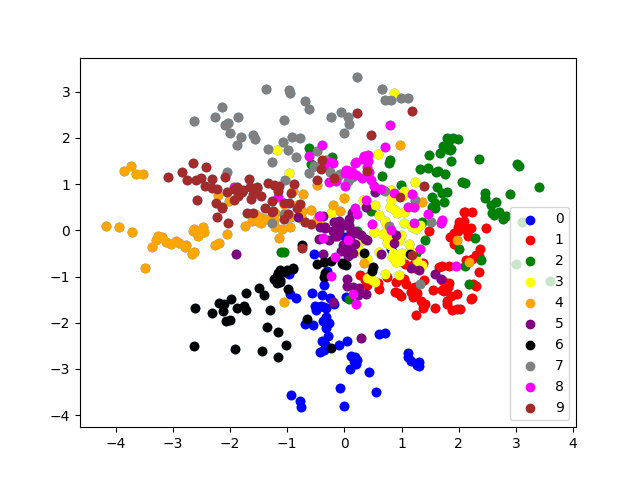

My implementation of SNE can be found here. To test my implementation, I ran it on the MNIST dataset (600 points, perplexity 20, 1000 iters). We get some pretty colorful graphs (these are for two separate runs of SNE):

It seems that SNE is somewhat effective at grouping images with the same labels together. In addition, classes which look similar are close together: the ‘4’ cluster is close to the ‘9’ cluster in both runs, as is the ‘3’ cluster and ‘8’ cluster. On the other hand, classes such as ‘2’ and ‘4’ which are very not-alike seem to be far apart.