Implementing Variational Inference

Previously, I described the purpose and method of variational inference: a means of approximating the posterior distribution of a model’s latent variables by choosing a proxy distribution over those variables that maximizes the Evidence Lower Bound (ELBO). Now, we’ll discuss how to maximize the ELBO, and then implement what we’ve learned.

Mean Field Variational Inference

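Mean Field Variational Inference is a specific instance of variational inference in which we choose a variational distribution $q$ that factorizes over the latent variables $z_{1:m}$. That is,

$$q(z_{1:m}) = \prod_{j=1}^{m} q(z_j).$$

In other words, the latent variables are mutually independent under $q$. Now let’s use some math to simplify the ELBO. Recall that, for observations $x_{1:n}$,

$$\text{ELBO}(q) = \mathbb{E}_q[\log p(z_{1:m}, x_{1:n})] - \mathbb{E}_q[\log q(z_{1:m})].$$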

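Expressing the joint probability in terms of conditional probabilities via the chain rule,

$$p(z_{1:m}, x_{1:n}) = p(x_{1:n}) \prod_{j=1}^{m} p(z_j \mid z_{1:(j-1)}, x_{1:n}).$$

Because $q$ factorizes, we also have:

$$\mathbb{E}_q[\log q(z_{1:m})] = \sum_{j=1}^{m} \mathbb{E}_{j}[\log q(z_j)],$$

where $\mathbb{E}_j$ denotes an expectation with respect to $q(z_j)$. Substituting both of these into the ELBO, we get

$$\text{ELBO}(q) = \log p(x_{1:n}) + \sum_{j=1}^{m} \Big( \mathbb{E}_q[\log p(z_j \mid z_{1:(j-1)}, x_{1:n})] - \mathbb{E}_{j}[\log q(z_j)] \Big).$$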
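As a sanity check on this decomposition, the sketch below uses a hypothetical toy model with two binary latent variables and a single fixed observation, so that $p(z_1, z_2, x)$ is just a 2×2 table of made-up positive numbers. It computes the ELBO of a factorized $q$ both directly and via the chain-rule form above; the two should agree, and both should lie below $\log p(x)$.

```python
import numpy as np

# Hypothetical toy joint for a fixed observation x:
# joint[z1, z2] = p(z1, z2, x) over two binary latent variables.
joint = np.array([[0.10, 0.05],
                  [0.02, 0.08]])

# Mean-field q(z1, z2) = q1(z1) * q2(z2)
q1 = np.array([0.3, 0.7])
q2 = np.array([0.6, 0.4])
q = np.outer(q1, q2)

# Direct form: E_q[log p(z, x)] - E_q[log q(z)]
elbo_direct = np.sum(q * np.log(joint)) - np.sum(q * np.log(q))

# Chain-rule form: log p(x) + E_q[log p(z1 | x)] + E_q[log p(z2 | z1, x)]
#                  - E_1[log q1(z1)] - E_2[log q2(z2)]
p_x = joint.sum()
p_z1 = joint.sum(axis=1) / p_x                               # p(z1 | x)
p_z2_given_z1 = joint / joint.sum(axis=1, keepdims=True)     # p(z2 | z1, x)
elbo_chain = (np.log(p_x)
              + np.sum(q1 * np.log(p_z1))
              + np.sum(q * np.log(p_z2_given_z1))
              - np.sum(q1 * np.log(q1))
              - np.sum(q2 * np.log(q2)))

print(elbo_direct, elbo_chain, np.log(p_x))
```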

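Consider the ELBO as a function of $q(z_k)$. By reordering the chain rule so that $z_k$ is the last variable, we can express the ELBO as

$$\text{ELBO}(q) = \mathbb{E}_q[\log p(z_k \mid z_{-k}, x_{1:n})] - \mathbb{E}_{k}[\log q(z_k)] + \text{const},$$

where $z_{-k}$ denotes all latent variables except $z_k$, and $\text{const}$ does not depend on $q(z_k)$ and is therefore a constant term. Now, given fixed distributions $q(z_j)$ for $j \neq k$, we can derive the optimal $q(z_k)$. Re-expressing the expectations, we note that

$$\mathbb{E}_q[\log p(z_k \mid z_{-k}, x_{1:n})] = \int q(z_k)\, \mathbb{E}_{-k}[\log p(z_k \mid z_{-k}, x_{1:n})]\, dz_k,$$

where $\mathbb{E}_{-k}$ is an expectation with respect to $\prod_{j \neq k} q(z_j)$, and therefore

$$\text{ELBO}(q) = \int q(z_k)\, \mathbb{E}_{-k}[\log p(z_k \mid z_{-k}, x_{1:n})]\, dz_k - \int q(z_k) \log q(z_k)\, dz_k + \text{const}.$$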

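When we consider that $\int q(z_k)\, dz_k = 1$, it turns out that we have a constrained optimization problem, which allows us to use the integral version of Lagrange multipliers. For those unfamiliar, details about using integral constraints can be found here and here, and may be covered in this blog at a later date. In essence, for some constant $\lambda$, setting the functional derivative of the Lagrangian with respect to $q(z_k)$ to zero leads to the condition

$$\mathbb{E}_{-k}[\log p(z_k \mid z_{-k}, x_{1:n})] - \log q(z_k) - 1 + \lambda = 0 \quad\Longrightarrow\quad q^*(z_k) \propto \exp\big\{\mathbb{E}_{-k}[\log p(z_k \mid z_{-k}, x_{1:n})]\big\}.$$

Because $p(z_k \mid z_{-k}, x_{1:n}) = p(z_k, z_{-k}, x_{1:n}) / p(z_{-k}, x_{1:n})$ and $p(z_{-k}, x_{1:n})$ is constant relative to $z_k$,

$$q^*(z_k) \propto \exp\big\{\mathbb{E}_{-k}[\log p(z_k, z_{-k}, x_{1:n})]\big\}.$$

Assuming that the normalized distribution of $q^*(z_k)$ is computable, we now have a means for computing the optimal distribution of $z_k$ when the other variables are fixed. How do we use this?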
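To make the update concrete, here is a sketch on a hypothetical toy model with two binary latent variables, where `log_joint[z1, z2]` holds $\log p(z_1, z_2, x)$ for a fixed observation. The helper names `optimal_factor` and `elbo` are illustrative, not from any library. For discrete variables, exponentiating and normalizing the expected log joint is simply a softmax over the values of $z_k$.

```python
import numpy as np

def optimal_factor(log_joint, q_other, k):
    """q*(z_k) ∝ exp{E_{-k}[log p(z_k, z_{-k}, x)]} for two discrete latents.

    log_joint[z1, z2] = log p(z1, z2, x); q_other is the fixed factor
    for the other variable.
    """
    if k == 0:
        expected = log_joint @ q_other   # for each z1: sum_z2 q2(z2) log p(z1, z2, x)
    else:
        expected = q_other @ log_joint   # for each z2: sum_z1 q1(z1) log p(z1, z2, x)
    w = np.exp(expected - expected.max())  # subtract max for numerical stability
    return w / w.sum()                     # normalize to a distribution

def elbo(log_joint, q1, q2):
    """ELBO of the factorized q(z1, z2) = q1(z1) q2(z2)."""
    q = np.outer(q1, q2)
    return np.sum(q * log_joint) - np.sum(q * np.log(q))

log_joint = np.log(np.array([[0.10, 0.05],
                             [0.02, 0.08]]))  # hypothetical toy values
q2 = np.array([0.6, 0.4])                     # hold q(z2) fixed
q1_star = optimal_factor(log_joint, q2, k=0)
print(q1_star, elbo(log_joint, q1_star, q2))
```

With $q(z_2)$ fixed, the ELBO is strictly concave in $q(z_1)$, so no other choice of $q(z_1)$ should give a larger value than `q1_star`.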

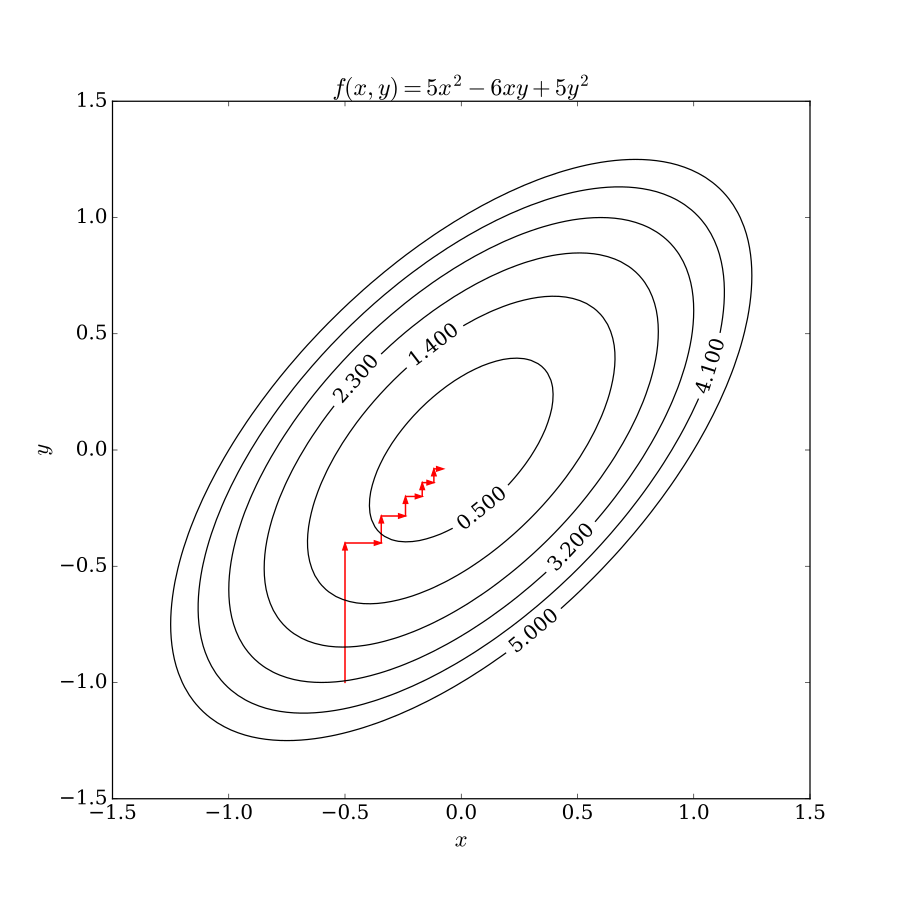

Coordinate Ascent

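The coordinate ascent method uses this result to reach a local optimum of the ELBO. We iterate through the latent variables $z_1, \dots, z_m$, setting each $q(z_k)$ to its optimal distribution while the other factors are held fixed. No single update can decrease the ELBO, and the ELBO is bounded above by $\log p(x_{1:n})$, so the ELBO converges; the optimum reached is generally local and depends on the initialization. An idea of how coordinate descent works on two parameters can be found here: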
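A minimal coordinate ascent loop for a hypothetical two-variable toy model might look like the following. Each pass replaces $q(z_1)$ and then $q(z_2)$ with the closed-form optimum $q^*(z_k) \propto \exp\{\mathbb{E}_{-k}[\log p(z_k, z_{-k}, x)]\}$, and the ELBO is recorded after every pass so we can check that it never decreases and stays below $\log p(x)$. The function names and toy numbers are illustrative assumptions.

```python
import numpy as np

def softmax(v):
    w = np.exp(v - v.max())   # subtract max for numerical stability
    return w / w.sum()

def elbo(log_joint, q1, q2):
    """ELBO of the factorized q(z1, z2) = q1(z1) q2(z2)."""
    q = np.outer(q1, q2)
    return np.sum(q * log_joint) - np.sum(q * np.log(q))

def cavi(log_joint, q1, q2, n_iters=50):
    """Coordinate ascent over the two factors of q(z1, z2)."""
    history = [elbo(log_joint, q1, q2)]
    for _ in range(n_iters):
        q1 = softmax(log_joint @ q2)   # optimal q(z1) with q(z2) fixed
        q2 = softmax(q1 @ log_joint)   # optimal q(z2) with q(z1) fixed
        history.append(elbo(log_joint, q1, q2))
    return q1, q2, history

log_joint = np.log(np.array([[0.10, 0.05],
                             [0.02, 0.08]]))  # hypothetical toy values
q1, q2, history = cavi(log_joint, np.array([0.5, 0.5]), np.array([0.5, 0.5]))
log_evidence = np.log(np.exp(log_joint).sum())  # log p(x)
print(history[0], history[-1], log_evidence)
```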

Implementation

Let’s revisit the Bayesian Mixture of Gaussians from the previous post:

- Generate from .

- Uniformly randomly select from the interval .

-

For :

a. Generate from .

b. Generate from .
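For experimenting, the sketch below samples from a two-component version of this model. It assumes unit-variance components, a prior $\mathcal{N}(0, \sigma^2)$ on each component mean, and equally likely assignments; the function name `sample_mixture` and the default $\sigma = 3$ are hypothetical choices, not parameters taken from the previous post.

```python
import numpy as np

def sample_mixture(n, sigma=3.0, seed=0):
    """Draw from an assumed two-component Bayesian mixture of Gaussians.

    Assumed form (hypothetical): mu_k ~ N(0, sigma^2) for k = 1, 2;
    c_i picks one of the two components uniformly; x_i ~ N(mu_{c_i}, 1).
    """
    rng = np.random.default_rng(seed)
    mu = rng.normal(0.0, sigma, size=2)   # component means
    c = rng.integers(0, 2, size=n)        # component assignments, 0 or 1
    x = rng.normal(mu[c], 1.0)            # observations
    return mu, c, x

mu, c, x = sample_mixture(1000)
print(mu, x[:5])
```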

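Under mean field variational inference, we choose a family where the distribution of each component mean $\mu_k$ is a Gaussian with parameters $m_k$ and $s_k^2$, and the distribution of every assignment $c_i$ contains only two probabilities, $\varphi_{i1}$ and $\varphi_{i2} = 1 - \varphi_{i1}$. Each variable is independent, so $q$ factorizes and the requirement for mean field variational inference holds. From our previous derivations in the Mean Field Variational Inference section, and assuming the standard setup in which $\mu_k \sim \mathcal{N}(0, \sigma^2)$, both components are equally likely a priori, and $x_i \sim \mathcal{N}(\mu_{c_i}, 1)$, we can obtain the following update rules for the variables in $q$:

$$\varphi_{ik} \propto \exp\Big\{ m_k\, x_i - \tfrac{1}{2}\big(m_k^2 + s_k^2\big) \Big\}, \qquad k \in \{1, 2\},$$

$$m_k = \frac{\sum_{i=1}^{n} \varphi_{ik}\, x_i}{1/\sigma^2 + \sum_{i=1}^{n} \varphi_{ik}}, \qquad s_k^2 = \frac{1}{1/\sigma^2 + \sum_{i=1}^{n} \varphi_{ik}}.$$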

Please note that we skipped all of the steps required to derive these two rules; they can be found in Section 8 of this. From here, it’s a simple matter of choosing a reasonable initialization of the variational parameters in $q$, followed by cycling through the update rules for coordinate ascent until the ELBO stops improving.
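A minimal sketch of the full procedure follows. It assumes a two-component mixture with $\mu_k \sim \mathcal{N}(0, \sigma^2)$, equally likely components, and unit-variance observations, with $q(\mu_k) = \mathcal{N}(m_k, s_k^2)$ and a two-outcome categorical $q(c_i)$. The name `cavi_gmm`, the random initialization, and the fixed iteration count (used in place of an ELBO-based stopping rule) are illustrative choices.

```python
import numpy as np

def cavi_gmm(x, sigma=3.0, n_iters=100, seed=0):
    """Mean-field coordinate ascent for an assumed two-component mixture.

    q(mu_k) = N(m_k, s2_k); q(c_i) = Categorical(phi[i]) over 2 components.
    Assumes mu_k ~ N(0, sigma^2), equal component priors, unit-variance x_i.
    """
    rng = np.random.default_rng(seed)
    m = rng.normal(0.0, 1.0, size=2)   # random initial means of q(mu_k)
    s2 = np.ones(2)                    # initial variances of q(mu_k)
    for _ in range(n_iters):
        # Assignment update: phi_ik ∝ exp{m_k x_i - (m_k^2 + s2_k) / 2}
        logits = np.outer(x, m) - 0.5 * (m**2 + s2)
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        phi = np.exp(logits)
        phi /= phi.sum(axis=1, keepdims=True)
        # Mean update: precision 1/sigma^2 + sum_i phi_ik
        precision = 1.0 / sigma**2 + phi.sum(axis=0)
        m = (phi * x[:, None]).sum(axis=0) / precision
        s2 = 1.0 / precision
    return m, s2, phi

# Synthetic data with hypothetical ground-truth means
rng = np.random.default_rng(1)
true_mu = np.array([-3.0, 3.0])
c = rng.integers(0, 2, size=500)
x = rng.normal(true_mu[c], 1.0)

m, s2, phi = cavi_gmm(x)
print(np.sort(m), s2)
```

Each row of `phi` holds the two assignment probabilities for one data point. Because the two components are interchangeable, the order of the recovered means is arbitrary, which is why the example sorts them before comparing.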

An implementation of mean field variational inference on a Bayesian Mixture of Gaussians, which uses these two update rules, can be found here.